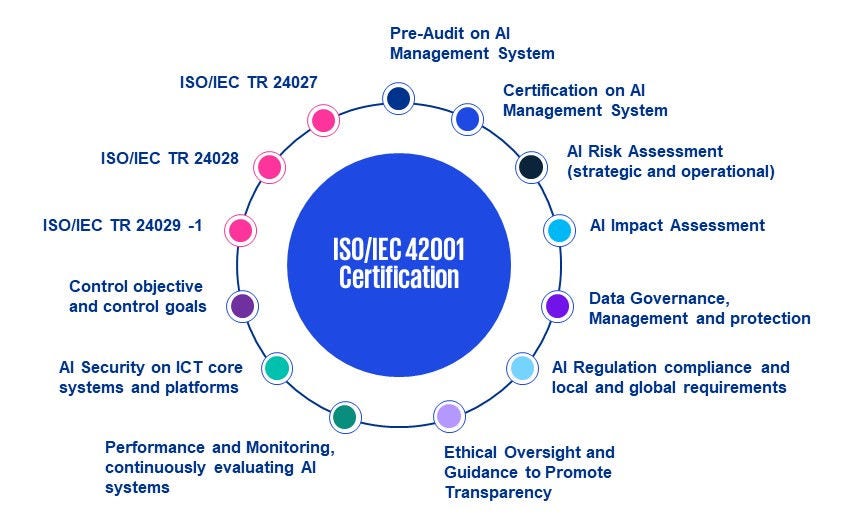

Artificial intelligence (AI) is transforming industries worldwide. Technologies like generative AI are revolutionizing finance, healthcare, manufacturing and customer service. While AI systems drive efficiency and innovation, they also present challenges in ethics, transparency and security. Organizations must adopt robust AI governance strategies to align with regulatory requirements and stakeholder expectations.

With regulatory scrutiny increasing, businesses need to proactively manage AI risks, including bias, data security and accountability. ISO/IEC 42001:2023 is the first international standard for an artificial intelligence management system (AIMS), offering a structured framework for AI governance. It helps organizations build trust, demonstrate AI compliance and align with international best practices. By supporting the responsible development, deployment and operation of AI systems, the standard addresses a critical factor in successful AI adoption and broader digital transformation.